Data Architectures and Modern Solutions with Microsoft Fabric

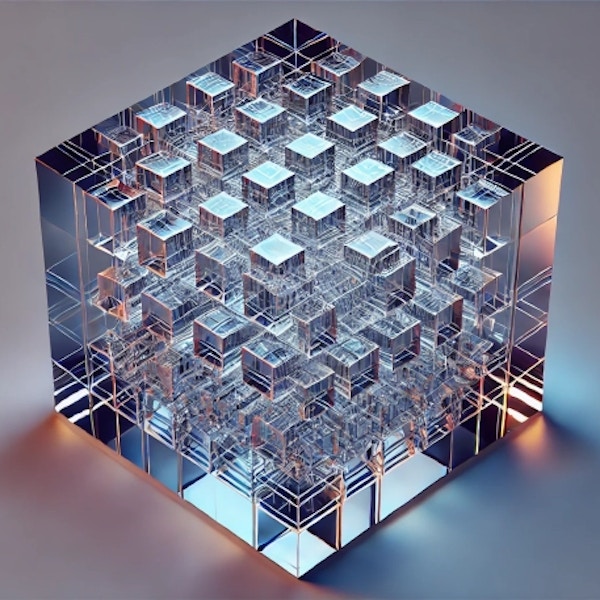

Choosing the right data architecture is essential for leveraging data assets effectively. This article explores Data Warehouses, Data Lakes, Lakehouses, Fabrics, and Microsoft Fabric's latest advancements.

Published on: October 8, 2024

In the dynamic landscape of data management, choosing the right data architecture has become increasingly vital for organizations aiming to leverage their data assets effectively. This article traces the evolution of data architectures, from Data Warehouses, Data Lakes, and Data Lakehouses to Data Fabrics and Data Mesh, and looks at how Microsoft Fabric brings many of these ideas together. Each architecture addresses different business challenges, and understanding their trade-offs helps organizations make informed choices.

A significant benefit of data warehouses is that they optimize data for reporting and analysis while offloading that workload from transactional systems, which could otherwise be overwhelmed by complex analytical queries. As a result, both transactional and analytical workloads tend to perform better. In addition, restructuring data into well-organized tables with meaningful names makes it easier for end users to understand, enabling self-service BI with tools like Power BI.
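To make the restructuring idea concrete, here is a minimal sketch in plain Python that reshapes hypothetical transactional order rows (terse column names, customer details repeated on every row) into a star schema: one customer dimension with a warehouse-assigned surrogate key and one sales fact table with readable column names. The star schema is one common warehouse design, not necessarily the only one meant above, and all table and column names here are illustrative assumptions; in practice this step usually runs in SQL or an ETL tool.

```python
from dataclasses import dataclass

# Hypothetical rows as exported by a transactional system: terse column names,
# with customer attributes repeated on every order.
raw_orders = [
    {"ord_id": 101, "cust_no": "C9", "cust_nm": "Contoso", "amt": 250.0},
    {"ord_id": 102, "cust_no": "C7", "cust_nm": "Fabrikam", "amt": 80.0},
    {"ord_id": 103, "cust_no": "C9", "cust_nm": "Contoso", "amt": 40.0},
]


@dataclass
class DimCustomer:
    customer_key: int      # surrogate key assigned by the warehouse
    customer_number: str   # business key from the source system
    customer_name: str


@dataclass
class FactSales:
    order_id: int
    customer_key: int      # points at DimCustomer.customer_key
    sales_amount: float


def build_star_schema(rows: list[dict]) -> tuple[list[DimCustomer], list[FactSales]]:
    """Split denormalized order rows into one dimension table and one fact table."""
    dims: dict[str, DimCustomer] = {}
    facts: list[FactSales] = []
    for row in rows:
        dim = dims.get(row["cust_no"])
        if dim is None:
            # First time this customer appears: assign the next surrogate key.
            dim = DimCustomer(len(dims) + 1, row["cust_no"], row["cust_nm"])
            dims[row["cust_no"]] = dim
        facts.append(FactSales(row["ord_id"], dim.customer_key, row["amt"]))
    return list(dims.values()), facts


dim_customer, fact_sales = build_star_schema(raw_orders)
```

A report can then total `fact_sales` by `customer_key` and join to `dim_customer` for readable names, without ever querying the source system.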

However, traditional data warehouses also had limitations. They struggled to keep up with rapidly growing data volumes and increasingly diverse data types, especially with the rise of the internet. These limitations set the stage for Data Lakes.

Data Lakes: A Flexible Approach

Data Lakes emerged as a flexible alternative to data warehouses, allowing organizations to store vast quantities of structured and unstructured data without predefining a schema. Conceptually, a data lake functions as a large, glorified file folder that can hold anything from CSVs to video files. This “schema-on-read” approach lets data be ingested quickly, while technically skilled users apply structure later, transforming the data and extracting value in real time or on demand.
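A minimal sketch of schema-on-read in Python: raw files land in a `raw/` folder (under a temporary directory standing in for the lake) exactly as received, with no validation, and a schema, here a simple column-to-type mapping chosen for illustration, is applied only when a consumer reads them. The function names and file names are hypothetical; the point is that the same files could be read with a different schema by a different consumer.

```python
import csv
import io
import json
import tempfile
from pathlib import Path


def land_raw(lake: Path, name: str, payload: str) -> Path:
    """Ingest: write the payload as-is, with no validation or schema."""
    path = lake / "raw" / name
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(payload)
    return path


def read_with_schema(path: Path, schema: dict) -> list[dict]:
    """Read: parse the file, then cast each column according to the caller's schema."""
    text = path.read_text()
    if path.suffix == ".csv":
        records = list(csv.DictReader(io.StringIO(text)))
    elif path.suffix == ".jsonl":
        records = [json.loads(line) for line in text.splitlines() if line.strip()]
    else:
        raise ValueError(f"no reader for {path.suffix}")
    # Keep only the columns the schema asks for and cast them; extra fields are ignored.
    return [{col: cast(rec[col]) for col, cast in schema.items()} for rec in records]


lake = Path(tempfile.mkdtemp())
csv_file = land_raw(lake, "payments.csv", "id,amount,note\n1,19.99,first\n2,5.00,\n")
json_file = land_raw(lake, "events.jsonl", '{"id": "3", "amount": "7.5", "device": "ios"}\n')

schema = {"id": int, "amount": float}  # decided at read time, not at ingest time
rows = read_with_schema(csv_file, schema) + read_with_schema(json_file, schema)
```

Nothing stops bad or inconsistent files from landing in `raw/`; problems only surface when someone reads them, which is exactly why governance matters for data lakes.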

Data Lakes provide a versatile solution, especially for data scientists and engineers who need access to raw data for machine learning or ad-hoc analysis. Yet this flexibility comes at a cost. Without careful organization and governance, a data lake can easily turn into an unmanageable “data swamp” in which data is hard to find, trust, or turn into insights. Poorly managed data lakes can drive up costs and create inefficiencies, which is why governance matters.

This spurred the development of the Modern Data Warehouse and later the Data Lakehouse architecture, which sought to blend the best of both worlds.

Data Lakehouses: Merging Data Lakes and Warehouses

Popularized around 2020, Data Lakehouse architectures represent the natural evolution in data storage, combining the flexibility of data lakes with the structured nature of data warehouses. Key to many lakehouse implementations is Delta Lake, an open-source storage layer that adds ACID transactions, schema enforcement, and support for SQL commands on top of the files in a data lake, capabilities that a standard data lake does not provide.
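Delta Lake's guarantees rest on an ordered transaction log stored next to the data files, in a `_delta_log` directory. The toy sketch below models two of the ideas named above in plain Python: atomic commits, where a writer claims the next version number only if no other writer has already claimed it, and schema enforcement, where a batch that does not match the table schema is rejected as a whole. It is a hypothetical illustration of the concept, not the Delta Lake API or its file format; `ToyTableLog` and its methods are invented for this example.

```python
from __future__ import annotations

import json
import os
import tempfile


class ToyTableLog:
    """Hypothetical, heavily simplified model of a Delta-style transaction log."""

    def __init__(self, root: str, schema: dict[str, type]):
        self.log_dir = os.path.join(root, "_delta_log")
        os.makedirs(self.log_dir, exist_ok=True)
        self.schema = schema  # column name -> expected Python type

    def _commit_path(self, version: int) -> str:
        # One JSON file per commit, named by its zero-padded version number.
        return os.path.join(self.log_dir, f"{version:020d}.json")

    def latest_version(self) -> int:
        versions = [int(name.split(".")[0]) for name in os.listdir(self.log_dir)
                    if name.endswith(".json")]
        return max(versions, default=-1)

    def _check_schema(self, rows: list[dict]) -> None:
        for row in rows:
            if set(row) != set(self.schema) or not all(
                isinstance(row[col], typ) for col, typ in self.schema.items()
            ):
                raise ValueError(f"row does not match table schema: {row!r}")

    def append(self, rows: list[dict]) -> int:
        """Commit rows as a new version, or commit nothing at all."""
        self._check_schema(rows)  # reject the whole batch before writing anything
        version = self.latest_version() + 1
        # O_EXCL: creation raises FileExistsError if a concurrent writer already
        # committed this version, so two writers cannot both claim it.
        # (A real system must also make the file contents appear atomically.)
        fd = os.open(self._commit_path(version), os.O_WRONLY | os.O_CREAT | os.O_EXCL)
        with os.fdopen(fd, "w") as f:
            json.dump({"add": rows}, f)
        return version

    def read(self, version: int | None = None) -> list[dict]:
        """Replay commits 0..version to reconstruct one consistent snapshot."""
        last = self.latest_version() if version is None else version
        rows: list[dict] = []
        for v in range(last + 1):
            with open(self._commit_path(v)) as f:
                rows.extend(json.load(f)["add"])
        return rows
```

In this toy, a writer that loses the race gets a `FileExistsError`; Delta Lake handles the equivalent situation with optimistic concurrency control, re-reading the log and retrying the commit when the changes do not conflict.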

With Delta Lake, data quality is easier to keep consistent, which makes analytics processes simpler and more reliable. This matters for companies that want to use a data lake as their primary repository while still serving operational data and analytics from the same platform. For a detailed explanation of Delta Lake’s architecture, see the official Delta Lake documentation.

By implementing a lakehouse, organizations can reduce the complexity of maintaining two separate environments, eliminate redundant copies of data, and lower storage costs, because Delta tables are stored as open-format Parquet files in low-cost object storage, which is typically cheaper than storing the same data inside a traditional relational database.

Data Fabric: The Holistic Approach to Data Management

The concept of Data Fabric takes data integration a step further. A Data Fabric aims to create a unified platform where all data assets are interconnected, regardless of where they are stored or the types of systems involved. The goal is to connect all organizational data seamlessly, enabling real-time processing, richer metadata management, and improved security.
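One way to picture this is a metadata catalog that maps logical dataset names to wherever the data physically lives and applies access rules in one place, so consumers ask for `customers` without knowing which system holds it. The Python sketch below is a hypothetical illustration: the system names, connector functions, roles, and in-memory data are all invented, and a real data fabric would use live connectors and far richer metadata.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical stand-ins for two physically separate systems.
CRM_DB = {"customers": [{"id": 1, "name": "Contoso"}, {"id": 2, "name": "Fabrikam"}]}
LAKE_FILES = {"/lake/loans.json": [{"loan_id": 10, "customer_id": 1, "balance": 5000}]}


@dataclass
class CatalogEntry:
    system: str                  # which system physically holds the data
    location: str                # table name or file path inside that system
    allowed_roles: set[str] = field(default_factory=set)
    description: str = ""


# One connector per system: the fabric, not the consumer, knows how to reach each one.
CONNECTORS: dict[str, Callable[[str], list[dict]]] = {
    "crm_sql": lambda loc: CRM_DB[loc],
    "data_lake": lambda loc: LAKE_FILES[loc],
}

CATALOG = {
    "customers": CatalogEntry("crm_sql", "customers", {"analyst", "steward"}, "Customer master"),
    "loans": CatalogEntry("data_lake", "/lake/loans.json", {"steward"}, "Loan balances"),
}


def read_dataset(name: str, role: str) -> list[dict]:
    """Resolve a logical name through the catalog, enforce access, then fetch."""
    entry = CATALOG[name]
    if role not in entry.allowed_roles:
        raise PermissionError(f"role {role!r} may not read {name!r}")
    return CONNECTORS[entry.system](entry.location)
```

With this design, moving `loans` to a different system means changing one catalog entry rather than every consumer that reads it.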

With tools like Microsoft Fabric, this concept has become more accessible. Microsoft Fabric, integrated into Azure, provides a cross-functional data processing platform that aligns with the principles of modern data fabrics. It combines data integration, data engineering, data warehousing, data science, and real-time analytics into a single environment, eliminating silos and ensuring consistent data access across departments.

Microsoft Fabric provides a cohesive environment with features like real-time data ingestion, Power BI for visualization, and Delta Lake for efficient data storage. These features can be implemented effectively with guidance from our Data Management Services. The aim of this ecosystem is to make data not only available but also trustworthy, governed, and optimized for each use case, whether exploratory analysis or decision-driven reporting.

Leveraging Microsoft Fabric for Financial Services

Microsoft Fabric provides a comprehensive solution for integrating financial data, making it easier for institutions to gain valuable insights and improve decision-making.

Consider a financial services example in which multiple transactional systems, from loan management systems to customer relationship management tools, operate independently. Using Microsoft Fabric, these disparate systems can be unified into a single, integrated data environment. With Azure Data Factory for ETL operations and Delta Lake for transactional storage, financial data can be ingested, cleaned, and stored in near real time.
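The ingest, clean, and store flow can be sketched in plain Python as an incremental upsert: records from two hypothetical source systems (a loan system and a CRM, with made-up field names) are normalized to one shape and merged into a single row per customer, keyed on a shared customer ID. In Fabric this logic would more likely run in a pipeline or as a Spark `MERGE` into a Delta table; the code below only illustrates the logic and does not use any Fabric API.

```python
from datetime import datetime


def normalize_loan(rec: dict) -> dict:
    # Hypothetical loan-system export: IDs like "CUST-00042", amounts as strings.
    return {
        "customer_id": int(rec["CustRef"].removeprefix("CUST-")),
        "outstanding_balance": float(rec["Bal"]),
        "updated_at": datetime.fromisoformat(rec["Modified"]),
    }


def normalize_crm(rec: dict) -> dict:
    # Hypothetical CRM export: integer IDs, free-text names with stray whitespace.
    return {
        "customer_id": rec["id"],
        "customer_name": rec["name"].strip().title(),
        "updated_at": datetime.fromisoformat(rec["last_update"]),
    }


def upsert(table: dict[int, dict], records: list[dict], source: str) -> None:
    """Merge normalized records into one row per customer_id.

    Timestamps are compared per source, so an old loan record can never
    overwrite a newer loan record, while CRM and loan fields share one row.
    """
    ts_col = f"{source}_updated_at"
    for rec in records:
        row = table.setdefault(rec["customer_id"], {"customer_id": rec["customer_id"]})
        if ts_col in row and rec["updated_at"] < row[ts_col]:
            continue  # stale record from this source: skip it
        for col, value in rec.items():
            if col != "updated_at":
                row[col] = value
        row[ts_col] = rec["updated_at"]


customers: dict[int, dict] = {}
upsert(customers, [normalize_crm({"id": 42, "name": "  contoso ltd ",
                                  "last_update": "2024-10-01T09:00:00"})], "crm")
upsert(customers, [normalize_loan({"CustRef": "CUST-00042", "Bal": "1250.50",
                                   "Modified": "2024-10-02T12:30:00"})], "loan")
```

Keeping a separate timestamp per source is a deliberate choice: a single shared timestamp would let a fresh CRM update cause an older but still valid loan balance to be discarded.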

Once the data is ingested, Copilot in Fabric can assist analysts and data engineers by providing automated insights, suggesting data transformations, or generating Power BI reports on demand. This can also simplify building machine learning models that predict business outcomes, such as customer behavior or operational efficiency, while reducing reliance on specialized data engineers for every report.

Power BI further allows non-technical business users to interact with these models, visualizing their predictions and running what-if scenarios to inform business strategy. Over time, this can help build a data-driven culture within the financial organization and drive better outcomes across departments.

Data Mesh: Decentralizing Data Ownership

Data Mesh is another recent trend that takes a different approach by decentralizing data ownership and placing it in the hands of domain teams. Instead of copying all data into a centralized repository, data mesh allows each domain (such as HR or Finance) to create its own data products, which are then made available across the organization via a federated system of governance.
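The split between domain ownership and federated governance can be sketched in Python: each domain describes its own data product, while a shared registry applies only a small set of organization-wide policies before the product becomes discoverable. The descriptor fields, the `ProductRegistry` class, and the two example policies (every product needs an owner, and columns that look like personal data must be declared as PII) are hypothetical, not rules taken from Dehghani's book or from Microsoft Fabric.

```python
from dataclasses import dataclass, field


@dataclass
class DataProduct:
    name: str
    domain: str                          # owning domain, e.g. "Finance" or "HR"
    owner: str                           # accountable team inside that domain
    columns: dict[str, str]              # column name -> data type
    pii_columns: set[str] = field(default_factory=set)


# Organization-wide policies: each returns a list of violation messages.
def has_owner(p: DataProduct) -> list[str]:
    return [] if p.owner.strip() else [f"{p.name}: no owner"]


def pii_declared(p: DataProduct) -> list[str]:
    # Hypothetical rule: columns whose names suggest personal data must be declared.
    suspicious = {c for c in p.columns if any(k in c.lower() for k in ("email", "ssn", "phone"))}
    return [f"{p.name}: undeclared PII column {c}" for c in sorted(suspicious - p.pii_columns)]


GLOBAL_POLICIES = [has_owner, pii_declared]


class ProductRegistry:
    """Shared registry: domains publish, the registry only enforces global rules."""

    def __init__(self) -> None:
        self.products: dict[str, DataProduct] = {}

    def publish(self, product: DataProduct) -> list[str]:
        violations = [v for policy in GLOBAL_POLICIES for v in policy(product)]
        if not violations:
            self.products[f"{product.domain}.{product.name}"] = product
        return violations


registry = ProductRegistry()
hr_headcount = DataProduct("headcount", "HR", "hr-data-team",
                           {"dept": "string", "employees": "int"})
finance_contacts = DataProduct("customer_contacts", "Finance", "",
                               {"customer_id": "int", "email": "string"})
```

Everything other than the global policies, such as which columns to expose or how often to refresh, stays a decision for the owning domain.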

While Microsoft Fabric can serve as the technical foundation for a data mesh implementation, adopting data mesh involves a significant cultural and organizational shift. Each domain must take responsibility for its data, which can mean building expertise that traditionally resided within IT departments. For a deeper exploration of data mesh principles, see Data Mesh: Delivering Data-Driven Value at Scale (O’Reilly, 2022) by Zhamak Dehghani, who originated the concept.

Conclusion: Choosing the Right Architecture

Data architectures today are not one-size-fits-all, and the choice depends heavily on the unique needs of each organization. Data Warehouses provide reliable, structured environments for reporting; Data Lakes offer flexibility for raw data; Data Lakehouses merge both, providing structured yet flexible storage; Data Fabrics bring everything together under a unified governance model; and Data Mesh places data ownership with the domain teams.

With Microsoft Fabric, organizations gain access to a platform that integrates many of these capabilities, providing seamless data management, integration, and insights generation. Whether your organization is at the stage of needing centralized control, flexibility for unstructured data, or a fully integrated, cross-functional data ecosystem, Microsoft Fabric offers a powerful solution to tackle these challenges.

For more information about how our experts can help you in implementing these architectures, please visit our Data Architecture Consulting Services.
